Peano

Load balancing toolbox for Peano 4

Many extensions, such as ExaHyPE 2, not only use the load balancing toolbox but also offer a tailored load balancing configuration. That is, they provide means for users to employ the toolbox without interfering with the main functions or calling the load balancing manually. Indeed, the toolbox follows the philosophy that the chosen load balancing implementation determines the load balancing strategy, while the configuration object tailors this strategy to your experimental setup and machine.

The toolbox's strategies typically have a

These are generic properties modelled as attributes of toolbox::loadbalancing::AbstractLoadBalancing. Each attribute is in turn a class of its own, and they all have different jobs. Please consult the individual class documentation for further details.

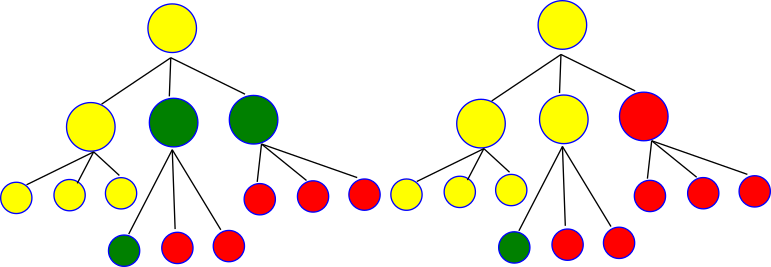

Besides "primitive" generic load balancing strategies that realise one particular approach, you can also design cascades of load balancing strategies. A cascade is a chain of different strategies: whenever the Nth strategy concludes that its load balancing has either terminated or stagnated, the cascade switches to the (N+1)th strategy to continue.
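The switching logic of a cascade can be illustrated with a small C++ sketch. It assumes a hypothetical interface in which every strategy reports a status after each step; `Status`, `Strategy`, `Cascade` and the toy `FixedStepsStrategy` are illustrative names and not the toolbox's actual classes.

```cpp
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical status a strategy reports after each balancing step.
// These names are illustrative and are not Peano's API.
enum class Status { Balancing, Terminated, Stagnated };

// Hypothetical minimal interface for one "primitive" strategy.
struct Strategy {
  virtual ~Strategy() = default;
  virtual Status step() = 0;  // one load balancing decision
};

// Toy strategy for illustration: keeps balancing for `steps` calls, then stagnates.
struct FixedStepsStrategy : Strategy {
  explicit FixedStepsStrategy(int steps) : remaining(steps) {}
  Status step() override {
    if (remaining > 0) {
      --remaining;
      return Status::Balancing;
    }
    return Status::Stagnated;
  }
  int remaining;
};

// A cascade runs strategy N until it reports Terminated or Stagnated,
// then hands over to strategy N+1 within the same step.
class Cascade : public Strategy {
 public:
  explicit Cascade(std::vector<std::unique_ptr<Strategy>> chain)
      : _chain(std::move(chain)) {}

  Status step() override {
    while (_active < _chain.size()) {
      if (_chain[_active]->step() == Status::Balancing) return Status::Balancing;
      ++_active;  // current strategy is done or stuck: switch to the next one
    }
    return Status::Terminated;  // every strategy in the chain is exhausted
  }

  std::size_t activeIndex() const { return _active; }

 private:
  std::vector<std::unique_ptr<Strategy>> _chain;
  std::size_t _active = 0;
};
```

Since a cascade is itself a `Strategy` in this sketch, cascades could be nested; whether the real toolbox allows that is not stated here.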

Many strategies have default settings. All load balancing strategies work against some cost metric, and the default here is, for example, loadbalancing::metrics::CellCount. However, you can create your own cost metric and use it instead, which might give you better balanced subdomains.
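A cost metric maps every cell to a number, and subdomains are compared by summing these numbers. The C++ sketch below shows why swapping the metric changes the balancing verdict. `CostMetric`, `CellCountMetric`, `ParticleMetric` and `CellInfo` are hypothetical names, not Peano's metric classes, and the particle-based weight is only an assumed example of a custom metric.

```cpp
#include <algorithm>
#include <vector>

// Illustrative cell description; a real Peano cell carries far more data.
struct CellInfo {
  int particles;  // hypothetical payload that drives the actual cost
};

// Hypothetical cost metric interface (not Peano's class hierarchy).
struct CostMetric {
  virtual ~CostMetric() = default;
  virtual double cost(const CellInfo& cell) const = 0;
};

// Simplest metric in the spirit of a cell count: every cell costs one unit.
struct CellCountMetric : CostMetric {
  double cost(const CellInfo&) const override { return 1.0; }
};

// A user-defined metric: cost grows with the number of particles per cell.
struct ParticleMetric : CostMetric {
  double cost(const CellInfo& cell) const override { return 1.0 + cell.particles; }
};

double totalCost(const std::vector<CellInfo>& cells, const CostMetric& metric) {
  double sum = 0.0;
  for (const auto& c : cells) sum += metric.cost(c);
  return sum;
}

// Ratio of the heaviest to the average subdomain cost; 1.0 means perfect balance.
double imbalance(const std::vector<std::vector<CellInfo>>& subdomains,
                 const CostMetric& metric) {
  double maxCost = 0.0, sum = 0.0;
  for (const auto& d : subdomains) {
    const double c = totalCost(d, metric);
    maxCost = std::max(maxCost, c);
    sum += c;
  }
  return sum > 0.0 ? maxCost / (sum / subdomains.size()) : 1.0;
}
```

Two subdomains with two cells each look perfectly balanced under the cell count, yet clearly imbalanced once the particle load is taken into account.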

Of particular relevance is not only the decision when and where to split, but also how to split up the domain further. Peano supports two different split modes: bottom-up along the space-filling curve (SFC), and top-down within the spacetree. The latter approach is similar to recursive kd-decomposition. Each approach comes with pros and cons. SFC-based partitioning makes it easier to obtain well-balanced domains, but the arising subdomain topology (who is adjacent to whom and what happens on coarser levels) can become tricky. Furthermore, you have to know your mesh structure completely to construct good SFC subpartitions. Recursive top-down decomposition, in contrast, lends itself to a domain decomposition which kicks in directly while we create the tree.
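The bottom-up SFC idea can be sketched as cutting the cell sequence, already ordered along the curve, into contiguous chunks of roughly equal cost. The greedy prefix-sum cut below is an illustration under that assumption; `sfcCuts` is a hypothetical helper and not Peano's splitting routine.

```cpp
#include <cstddef>
#include <vector>

// Cut a sequence of cell costs, already ordered along the space-filling curve,
// into `parts` contiguous chunks of roughly equal cost. Returns the index of
// the first cell of every chunk (greedy prefix-sum cut, illustration only).
std::vector<std::size_t> sfcCuts(const std::vector<double>& costAlongCurve, int parts) {
  double total = 0.0;
  for (double c : costAlongCurve) total += c;

  std::vector<std::size_t> starts{0};
  double prefix = 0.0;
  for (std::size_t i = 0;
       i < costAlongCurve.size() && static_cast<int>(starts.size()) < parts; ++i) {
    prefix += costAlongCurve[i];
    // Start a new chunk once the prefix reaches the next ideal boundary k*total/parts.
    const double target = total * starts.size() / parts;
    if (prefix >= target && i + 1 < costAlongCurve.size()) starts.push_back(i + 1);
  }
  return starts;
}
```

Balancing along a one-dimensional sequence is easy, which is why SFC cuts tend to give well-balanced domains; the sketch says nothing about the resulting subdomain topology, which is exactly the tricky part mentioned above.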

Not all strategies support both domain splitting schemes, so you have to consult their documentation. Before you do so, I strongly recommend reading through Peano's domain decomposition remarks, and maybe even reading the paper

which is available through the gold access route.

Peano does not support rebalancing with joins. That is, once a domain is split into two parts, the cuts in-between these parts will stay there forever. Almost. If a domain A splits into A and B, and if B degenerates, i.e. hosts exclusively unrefined cells, then B can be instructed to join A again.
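The only permitted join can be expressed as a predicate: a tree may merge back only into the tree it was split off from, and only if it has degenerated. The `TreeInfo` record and `mayJoin` function are hypothetical; Peano tracks this information internally in its own data structures.

```cpp
#include <vector>

// Minimal description of a tree (subdomain) for the join check.
// Hypothetical type: Peano keeps this information internally.
struct TreeInfo {
  int id;
  int parentId;                   // tree it was split off from (-1 for the root tree)
  std::vector<bool> cellRefined;  // refinement flag of every local cell
};

// Tree `child` may join `parent` only if it was forked from `parent` and has
// degenerated, i.e. hosts exclusively unrefined cells. All other cuts stay.
bool mayJoin(const TreeInfo& child, const TreeInfo& parent) {
  if (child.parentId != parent.id) return false;
  for (bool refined : child.cellRefined) {
    if (refined) return false;
  }
  return true;
}
```

In the notation above, A is `parent` and B is `child`: B joins A again only once B holds no refined cell at all.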

This strict commitment to tree-like joins and forks makes the implementation easier, notably in a multiscale context. You can still oversubscribe the threads, i.e. create more logical subpartitions than threads, and hence mimic diffusion-based load balancing, for example.
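Oversubscription helps because, with more trees than threads, the per-thread load can be evened out by how trees are mapped onto threads, even though no cut ever moves. The sketch below uses a greedy longest-processing-time-first (LPT) mapping as a stand-in for this effect. It is neither Peano's task scheduler nor a diffusion scheme itself, and `assignToThreads` is a hypothetical helper.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <numeric>
#include <queue>
#include <utility>
#include <vector>

// Map many logical subpartitions (trees) onto fewer threads: heaviest tree
// first, always to the currently least-loaded thread (LPT heuristic).
// Returns the thread index of every tree, or -1 if no thread is available.
std::vector<int> assignToThreads(const std::vector<double>& treeCost, int threads) {
  if (threads <= 0) return std::vector<int>(treeCost.size(), -1);

  std::vector<std::size_t> order(treeCost.size());
  std::iota(order.begin(), order.end(), 0);
  std::sort(order.begin(), order.end(),
            [&](std::size_t a, std::size_t b) { return treeCost[a] > treeCost[b]; });

  // Min-heap of (accumulated load, thread index).
  using Slot = std::pair<double, int>;
  std::priority_queue<Slot, std::vector<Slot>, std::greater<Slot>> load;
  for (int t = 0; t < threads; ++t) load.push({0.0, t});

  std::vector<int> owner(treeCost.size());
  for (std::size_t tree : order) {
    Slot s = load.top();  // least-loaded thread
    load.pop();
    owner[tree] = s.second;
    s.first += treeCost[tree];
    load.push(s);
  }
  return owner;
}
```

With four trees of cost 4, 3, 3 and 2 on two threads, both threads end up with a load of 6, although no single tree could be split further.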

Dynamic AMR is no problem for the domain decomposition. That is, a refined region can ripple or propagate through a domain cut. However, Peano will never deploy a cell to another tree if that cell hosts a hanging vertex and its parent cell is not deployed to the same tree, too. This notably constrains the SFC-based bottom-up splitting: you might encounter situations where the AMR effectively "stops" the SFC decomposition from adding further cells to a new domain. The reason for this policy is that tracking multiscale data consistency between hanging nodes on different trees would otherwise become unmanageably hard.
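How the hanging-vertex rule can stall an SFC cut is shown in the following flattened toy model. Cells are listed along the curve, and each one refers to its parent by index. `SfcCell`, `mayDeploy` and `growAlongCurve` are hypothetical; Peano's real data structures and traversal differ.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical cell record along the SFC; Peano's real data structures differ.
struct SfcCell {
  int  parent;              // index of the parent cell in the same array, -1 for none
  bool hostsHangingVertex;
};

// Rule from the text: a cell must not move to a new tree if it hosts a hanging
// vertex and its parent does not move to that tree as well.
bool mayDeploy(const std::vector<SfcCell>& cells, const std::vector<bool>& deployed,
               std::size_t i) {
  const SfcCell& c = cells[i];
  if (!c.hostsHangingVertex) return true;
  return c.parent >= 0 && deployed[static_cast<std::size_t>(c.parent)];
}

// Grow a new subdomain along the curve until `wanted` cells are taken or the
// rule blocks the next cell, i.e. until the AMR "stops" the SFC decomposition.
std::size_t growAlongCurve(const std::vector<SfcCell>& cells, std::vector<bool>& deployed,
                           std::size_t first, std::size_t wanted) {
  std::size_t taken = 0;
  for (std::size_t i = first; i < cells.size() && taken < wanted; ++i) {
    if (!mayDeploy(cells, deployed, i)) break;
    deployed[i] = true;
    ++taken;
  }
  return taken;
}
```

If the chunk starts after the parent of a cell with a hanging vertex, growth halts at that cell; if it starts early enough to include the parent, the same cell can be taken.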

Inappropriate or problematic domain decomposition decisions will always manifest in performance flaws. There are a few further resources that discuss generic details which are not tied to this toolbox. However, they are very useful to get the most out of this toolbox:

Further to the generic Peano discussions, individual extensions have their own specific discussion of how to analyse and to understand performance characteristics, which in turn again might relate to domain decomposition decisions:

While the toolbox offers some generic load balancing schemes, it will not be able to accommodate all applications. Notably, it will always lack domain-specific knowledge. However, there are generic tuning knobs and considerations. The page below summarises some generic rationale and lessons learned, i.e. it explains why things work the way they do. Particular flaws and their fixes are discussed on the Peano optimisation page, which collects the recipes for spotting and fixing them.

Every load balancing strategy in Peano 4 will have to balance two contradicting objectives:

The first objective is a strict one: if we run out of memory, the simulation will crash. The second objective is merely a desirable one.

We derive generic guidelines for any load balancing:

It can make sense to take into account that aggressive top-down splitting can handle AMR boundaries, while bottom-up SFC cuts struggle with them (see implementation). Consequently, you might want to switch between these flavours, too: SFC-based partitioning is good if a mesh is rather regular.

As soon as you have a mesh with a lot of AMR in it, it can be advantageous to switch to top-down domain decomposition. SFC-based approaches struggle to create subdomains spanning AMR boundaries (for technical reasons). The top-down approach basically does recursive kd-partitioning and hence is far more robust with respect to AMR.
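For contrast with the SFC cut, the following sketch shows a kd-style recursive coordinate bisection. It splits a set of 2D cells along the wider extent so that each half receives a cost share proportional to the number of subdomains it will host, then recurses. This is an assumed, simplified stand-in: Peano's top-down splitter works on spacetree cells rather than on a cloud of cell centres, and `Cell2D`, `bisect` and `topDownSplit` are hypothetical names.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// A leaf cell, given by its centre and cost (illustration only).
struct Cell2D { double x, y, cost; };

// Recursive coordinate bisection: assign subdomain ids firstId..firstId+parts-1.
void bisect(const std::vector<Cell2D>& cells, std::vector<std::size_t> idx,
            int parts, int firstId, std::vector<int>& owner) {
  if (parts <= 1 || idx.size() <= 1) {
    for (std::size_t i : idx) owner[i] = firstId;
    return;
  }
  // Pick the axis along which the cell centres spread the most.
  double minX = cells[idx[0]].x, maxX = minX, minY = cells[idx[0]].y, maxY = minY;
  for (std::size_t i : idx) {
    minX = std::min(minX, cells[i].x); maxX = std::max(maxX, cells[i].x);
    minY = std::min(minY, cells[i].y); maxY = std::max(maxY, cells[i].y);
  }
  const bool alongX = (maxX - minX) >= (maxY - minY);
  std::sort(idx.begin(), idx.end(), [&](std::size_t a, std::size_t b) {
    return alongX ? cells[a].x < cells[b].x : cells[a].y < cells[b].y;
  });

  // The left half gets a cost share proportional to its number of subdomains.
  const int leftParts = parts / 2;
  double total = 0.0;
  for (std::size_t i : idx) total += cells[i].cost;
  const double target = total * leftParts / parts;

  std::size_t cut = 0;
  double prefix = 0.0;
  while (cut < idx.size() - 1 && prefix + cells[idx[cut]].cost <= target) {
    prefix += cells[idx[cut]].cost;
    ++cut;
  }
  if (cut == 0) cut = 1;  // keep both halves non-empty

  std::vector<std::size_t> left(idx.begin(), idx.begin() + cut);
  std::vector<std::size_t> right(idx.begin() + cut, idx.end());
  bisect(cells, left, leftParts, firstId, owner);
  bisect(cells, right, parts - leftParts, firstId + leftParts, owner);
}

std::vector<int> topDownSplit(const std::vector<Cell2D>& cells, int parts) {
  std::vector<int> owner(cells.size(), 0);
  std::vector<std::size_t> idx(cells.size());
  std::iota(idx.begin(), idx.end(), 0);
  bisect(cells, idx, parts, 0, owner);
  return owner;
}
```

Each cut here only depends on the geometry and cost of the current region, not on the global curve order, which is one way to see why a top-down scheme copes more gracefully with locally refined regions.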

Peano, true to its name, favours the bottom-up SFC-based decomposition, but most load balancing flavours also have a variant which uses top-down splitting. Please consult all classes within toolbox::loadbalancing::strategies.

There is a dedicated subpage Load balancing strategies which describes load balancing with graph theoretic glasses on.