|

Peano

|

|

Peano

|

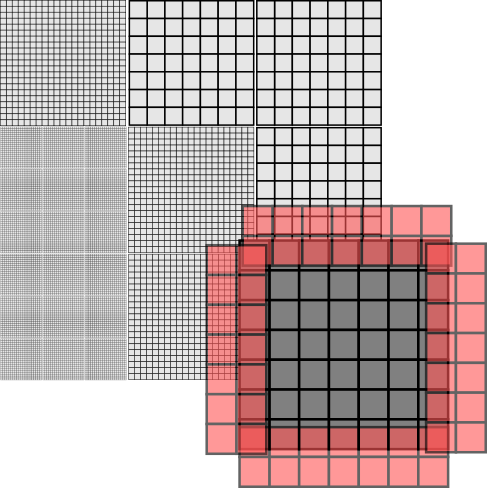

ExaHyPE's Finite Volume scheme is based upon block-structured Cartesian meshes. All Finite Volume solvers work with blockstructured AMR: They embed \(p \times p\) patches into the octants (cells of the spacetree). To avoid confusion, I prefer to use the term volume for the cells within the patch, as cell would be ambigous given that we construct the host mesh from spacetree cells.

With the Finite Volume methods, we only have single-shot methods at the moment. That is, Peano runs over the octants of the mesh, i.e. the patches. Per patch, it calls and ExaHyPE update kernel, which takes the patch and moves it forwards in time by a given \( \Delta t \). ExaHyPE is shipped with several update schemes. You can obviously also write your own numerical scheme manually, or you employ ExaHyPE's kernel domain-specific language (DSL).

Every patch has to depends upon its neighbouring, i.e. face-connected patches. To be able to do this, ExaHyPE passes a

\( (p+2N)^d \) patch into a kernel. The p is the number of volumes per patch. The d is the dimension, i.e. two or three. The \( N \geq 1 \) is a halo around the cell. Different Finite Volume schemes have different halo sizes. You may assume that ExaHyPE befills these halo values with valid data from the neighbours, i.e. their synchronisation is none of the kernel's business. The input is called \( Q_{in} \) from hereon. The kernel returns a \( (p+2 N)^d \) with the new time step's data. It is called \( Q_{out} \).

Here are some details about the data used within the kernels:

In Finite Volumes, we assume that each voxel holds exactly one value per PDE quantity. We assume that the solution is constant within the volume.

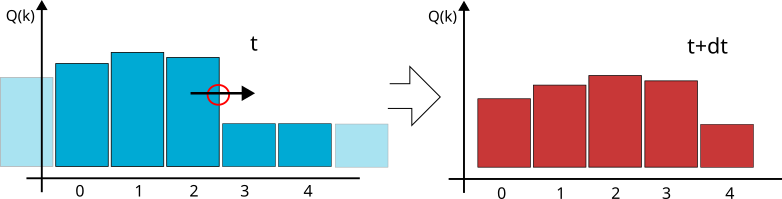

In the 1d illustration above, we have a patch with \( p=5 \) volumes, one quantity and a halo of one. That is, we are given a \( Q_{in} \) with 7 values and return 5 new values for the next time step. So now we do the math:

If the solution per volume is constant, nothing happens within the volume per time step. The solution inside is kind of "stable". If something happens with a volume, i.e. if the solution grows or goes down, then this must be due to stuff flowing in or out of the voxel. We can write this down as integral over each volume V:

\( \int _V Q_k(t+\Delta t) dx = \int _V Q_k(t) dx + \Delta t \cdot \int _V \partial _t Q_k(t) dx \)

We have already used an explicit Euler time integrator here. Next, we make the formula a little bit simpler exploiting the fact that a volume shall have the volume length \( h \) and each volume is a square or cube, respectively. Once again, it helps that the solution is constant per volume.

\begin{eqnarray*} \int _V Q_k(t+\Delta t) dx & = & \int _V Q_k(t) dx + \Delta t \cdot \int _V \partial _t Q_k(t) dx \\ h^d Q_k(t+\Delta t) & = & h^d Q_k(t) dx + \Delta t \cdot \int _V \partial _t Q_k(t) dx \\ h^d Q_k(t+\Delta t) & = & h^d Q_k(t) dx + \Delta t \cdot \int _V \nabla F(Q_k(t)) + S(Q_k) dx \end{eqnarray*}

In the last line, we now insert our PDE written as

\( \partial _t Q(t) = \nabla F(Q(t)) + S(Q) \)

and use the divergence theorem:

\begin{eqnarray*} h^d Q_k(t+\Delta t) & = & h^d Q_k(t) dx + \Delta t \cdot \int _V \nabla F(Q_k(t)) + S_k(Q) dx \\ Q_k(t+\Delta t) & = & Q_k(t) dx + \frac{\Delta t}{h^d} \cdot \left( \oint _{\partial V} (F(Q_k(t)),n) dS(x) + h^d \cdot S(Q_k) \right) \end{eqnarray*}

So in principle, we now know exactly what to do:

The problem is that we don't know the F value at the voxel. That thing doesn't really exist. The solution has no value there, as it jumps. So we have to approximate this term \( F(Q) \) at the voxel boundary with a numerical flux.

One of the simlest flux is Rusanov:

\( {F|}_{n}(Q) \approx \frac{1}{2} (F_n^+(Q) + F_n^-(Q)) - (Q^+ - Q^-) \cdot \text{max}(\lambda_{max,n}(Q^+),\lambda_{max,n}(Q^-)) \)

The flux on a face \( {F}_{n}(Q) \) is the average of the flux within the left adjacent cell and the right adjacent cell. This average is denoted by a red circle in the sketch above. If we only used the average, we would get a solution where shocks smear out quickly. In the sketch above, the wave/shock travels from left to right, so it makes sense to assume that actually way more flow goes left-right than right-to-left. Averaging is not a good idea. So we take the difference \( Q^+ - Q^- \), we know how to correct the numerical flux. Take into account that the "remaining" eigenvalues are all positive for a stricly hyperbolic system. Globally, there is one negative eigenvalue but that one corresponds to the time dimension and is already taken care of.

Yet, the speed matters: If the flow is very slow, then we only should correct the average by a tiny amount. If the wave travels very fast, we should significantly take this solution gradient into account. The wave speed it approximated/bounded by the maximum eigenvalue along the direction of interest. Again, we cannot evaluate the maximum eigenvalue on the face directly, but we only are interested in the maximum eigenvalue. Therefore, we can evaluate the directional eigenvalue in the left and right volume and again take the maximum of those guys.

Our discussion of an implementation of the scheme - which can be read as blueprint of our compute kernels in ExaHyPE - is inspired by

who write down the algorithm as a sequence of tensor multiplications, i.e. generalised matrix-matrix equations. This does not work in ExaHyPE 1:1 as we support non-linear PDEs in the generic kernel formulations. However, we can use similar operator concatenation ideas.

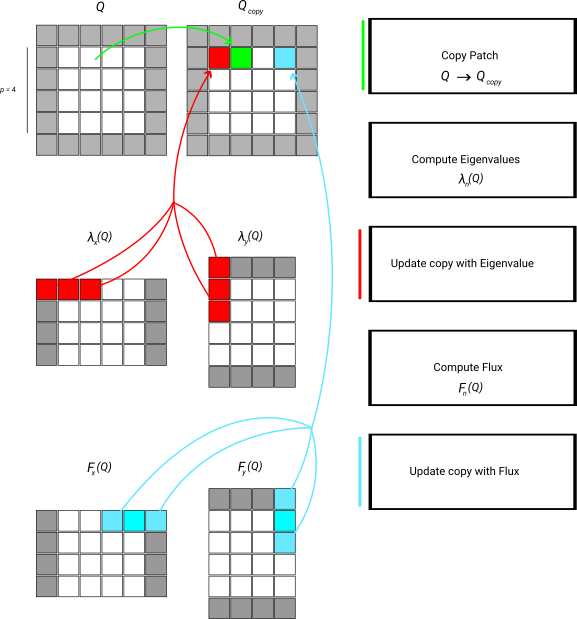

We demonstate the data flow by means of the simplified numerical flux which only takes the average. This algorithm consists of a strict sequence of steps:

Now all temporary data are available. We prepare the output and then add the PDE contributions. This part is basically a stencil variant. Or we can write it down as a tensor-vector product which is added to \( Q_{out} \):

The extension to Rusanov for example is straightforward, as long as we take into account that our "stencil" here has to incorporate the max function. Below is schematic illustration of the data flow:

Note that the scheme as well as the description lack an important detail: Every single step is to be done over a set of patches, as we update multiple patches in one go. In the kernels, we can write down this logic as a sequence of operators, but once you employ our xDSL kernel language, the underlying kernel generator will permute and fuse all the loops and operations.