Peano

Construct the Finite Volume (limiter) scheme.

Public Member Functions | |

| __init__ (self, name, patch_size, bool amend_priorities, KernelParallelisation parallelisation_of_kernels) | |

| Construct the limiter. | |

| create_action_sets (self) | |

| Not really a lot of things to do here. | |

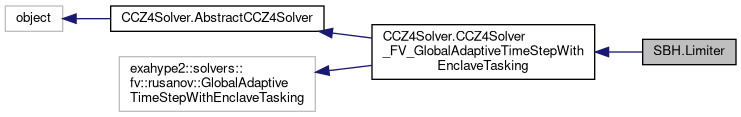

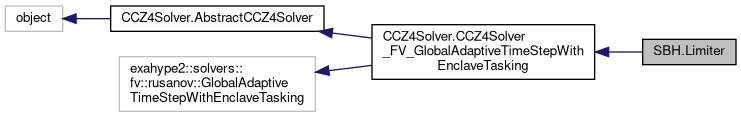

| Public Member Functions inherited from CCZ4Solver.CCZ4Solver_FV_GlobalAdaptiveTimeStepWithEnclaveTasking | |

| __init__ (self, name, patch_size, min_volume_h, max_volume_h, pde_terms_without_state) | |

| Construct solver with enclave tasking and adaptive time stepping. | |

| add_tracer (self, name, coordinates, project, number_of_entries_between_two_db_flushes, data_delta_between_two_snapsots, time_delta_between_two_snapsots, clear_database_after_flush, tracer_unknowns) | |

| Add tracer to project. | |

| Public Member Functions inherited from CCZ4Solver.AbstractCCZ4Solver | |

| __init__ (self) | |

| Constructor. | |

| enable_second_order (self) | |

| add_all_solver_constants (self) | |

| Add domain-specific constants. | |

| add_makefile_parameters (self, peano4_project, path_of_ccz4_application) | |

| Add include path and minimal required cpp files to makefile. | |

Data Fields | |

| str | enclave_task_priority = "tarch::multicore::Task::DefaultPriority+1" |

| Data Fields inherited from CCZ4Solver.AbstractCCZ4Solver | |

| dict | integer_constants |

| dict | double_constants |

Protected Member Functions | |

| _store_cell_data_default_guard (self) | |

| Mask out exterior cells. | |

| _load_cell_data_default_guard (self) | |

| _provide_cell_data_to_compute_kernels_default_guard (self) | |

| _provide_face_data_to_compute_kernels_default_guard (self) | |

| _store_face_data_default_guard (self) | |

| _load_face_data_default_guard (self) | |

| Protected Member Functions inherited from CCZ4Solver.AbstractCCZ4Solver | |

| _add_standard_includes (self) | |

| Add the headers for the compute kernels and initial condition implementations. | |

Protected Attributes | |

| _fused_compute_kernel_call_cpu | |

| _name | |

Additional Inherited Members | |

| Static Public Attributes inherited from CCZ4Solver.AbstractCCZ4Solver | |

| float | Default_Time_Step_Size_Relaxation = 0.1 |

| Static Protected Attributes inherited from CCZ4Solver.AbstractCCZ4Solver | |

| dict | _FO_formulation_unknowns |

| dict | _SO_formulation_unknowns |

Construct the Finite Volume (limiter) scheme.

We assume that the underlying Finite Differences scheme has a patch size of 6x6x6. To make the Finite Volume scheme's time stepping (and accuracy) match this patch size, we have to employ a mesh that is 16 times finer.

Note that the limiter has no minimum or maximum mesh size of its own. The higher-order solver dictates the mesh structure, and the Finite Volume scheme follows that structure.

| SBH.Limiter.__init__ | ( | self, name, patch_size, bool amend_priorities, KernelParallelisation parallelisation_of_kernels | ) |

Construct the limiter.

patch_size: Integer. Pass in the patch size of the FD4 scheme or, if you are using RKDG, pass in 1. The Finite Volume patch will then be 16 times finer.
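The relation between the two patch sizes can be sketched as plain arithmetic. This is only an illustration: it assumes that "16 times finer" means multiplying the patch size by 16 along each coordinate axis, and the helper name `finite_volume_patch_size` is hypothetical and not part of SBH.py.

```python
# Refinement factor quoted in the documentation above.
FV_REFINEMENT_FACTOR = 16


def finite_volume_patch_size(patch_size: int) -> int:
    """Return the assumed per-axis Finite Volume patch size.

    Hypothetical helper: assumes the factor of 16 applies per coordinate axis.
    """
    if patch_size < 1:
        raise ValueError("patch_size must be a positive integer")
    return FV_REFINEMENT_FACTOR * patch_size


# FD4 with the 6x6x6 patch assumed in the class description.
fd4_fv_patch = finite_volume_patch_size(6)

# RKDG, where the documentation asks for patch_size = 1.
rkdg_fv_patch = finite_volume_patch_size(1)

print(fd4_fv_patch, rkdg_fv_patch)
```

Under this assumption, the FD4 case yields a much larger Finite Volume patch than the RKDG case, which is why the value handed to the constructor matters.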

Definition at line 62 of file SBH.py.

References __init__(), and CCZ4Solver.AbstractCCZ4Solver.double_constants.

Referenced by __init__().

| SBH.Limiter._load_cell_data_default_guard | ( | self | ) | protected |

Definition at line 145 of file SBH.py.

References _load_cell_data_default_guard(), and _name.

Referenced by _load_cell_data_default_guard().

| SBH.Limiter._load_face_data_default_guard | ( | self | ) | protected |

Definition at line 174 of file SBH.py.

References _load_face_data_default_guard(), and _name.

Referenced by _load_face_data_default_guard().

| SBH.Limiter._provide_cell_data_to_compute_kernels_default_guard | ( | self | ) | protected |

Definition at line 154 of file SBH.py.

References _name, and _provide_cell_data_to_compute_kernels_default_guard().

Referenced by _provide_cell_data_to_compute_kernels_default_guard().

| SBH.Limiter._provide_face_data_to_compute_kernels_default_guard | ( | self | ) | protected |

Definition at line 163 of file SBH.py.

References _provide_face_data_to_compute_kernels_default_guard().

Referenced by _provide_face_data_to_compute_kernels_default_guard().

| SBH.Limiter._store_cell_data_default_guard | ( | self | ) | protected |

Mask out exterior cells.

Definition at line 131 of file SBH.py.

References _name, and _store_cell_data_default_guard().

Referenced by _store_cell_data_default_guard().

| SBH.Limiter._store_face_data_default_guard | ( | self | ) | protected |

Definition at line 169 of file SBH.py.

References _name, and _store_face_data_default_guard().

Referenced by _store_face_data_default_guard().

| SBH.Limiter.create_action_sets | ( | self | ) |

Not really a lot of things to do here.

The one important exception is that we must ensure we solve only inside the local domain of the FV scheme. By default, ExaHyPE 2 solves the PDE everywhere. If data is not stored persistently or loaded from the persistent stacks, it still solves, because it then assumes that such data arises from dynamic AMR. In this particular case, we have to mask out certain subdomains explicitly.

Doing so is not just a nice optimisation. It is essential, because applying the compute kernel to garbage data would produce invalid eigenvalues.
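The masking idea can be illustrated with a minimal, self-contained sketch. It mirrors what the reference lists on this page suggest, namely that each guard method calls its inherited counterpart and uses `_name`. Everything else is an assumption: `BaseSolver`, `MaskedLimiter`, the guard string `"true"` and the predicate `_isInsideLimiterDomain(marker)` are hypothetical placeholders, not the actual ExaHyPE 2 classes or generated expressions.

```python
class BaseSolver:
    """Hypothetical stand-in for the inherited solver (not the ExaHyPE 2 class)."""

    def __init__(self, name):
        self._name = name

    def _store_cell_data_default_guard(self):
        # Placeholder for whatever predicate the parent class would produce.
        return "true"


class MaskedLimiter(BaseSolver):
    """Restricts the inherited guard to cells inside the limiter's domain."""

    def _store_cell_data_default_guard(self):
        # Keep the inherited condition and append an extra, solver-specific
        # predicate. The predicate name is made up for this illustration.
        return (
            super()._store_cell_data_default_guard()
            + " and "
            + self._name
            + "_isInsideLimiterDomain(marker)"
        )


guard = MaskedLimiter("CCZ4SBH_FV")._store_cell_data_default_guard()
print(guard)
```

Extending the inherited guard rather than replacing it keeps any condition the parent class imposes. The extra predicate only narrows the set of cells on which data is stored, so the compute kernel is never applied to exterior cells.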

Definition at line 179 of file SBH.py.

References _name, and create_action_sets().

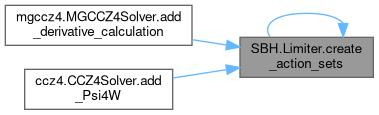

Referenced by mgccz4.MGCCZ4Solver.add_derivative_calculation(), ccz4.CCZ4Solver.add_Psi4W(), and create_action_sets().

| SBH.Limiter._fused_compute_kernel_call_cpu | protected |

| SBH.Limiter._name | protected |

Definition at line 166 of file SBH.py.

Referenced by _load_cell_data_default_guard(), _load_face_data_default_guard(), _provide_cell_data_to_compute_kernels_default_guard(), _store_cell_data_default_guard(), _store_face_data_default_guard(), CCZ4Solver.CCZ4Solver_FD4_SecondOrderFormulation_GlobalAdaptiveTimeStepWithEnclaveTasking.add_tracer(), and create_action_sets().

| str SBH.Limiter.enclave_task_priority = "tarch::multicore::Task::DefaultPriority+1" |