|

Peano

|

|

Peano

|

If you want to solve systems of PDEs where some PDEs advance on different time scales, solve different problems, are active in different domains, and so forth, you have two options on the table: You can model the solvers as one big system or you can logically code the solvers as two totally separate things and couple them. If you have to run some postprocessing, you also have two options: Either make the postprocessing a "real" postprocessing by adding it as postprocessing step to your solvers of choice, or you add separate mesh sweeps which perform the postprocessing.

The former version is faster, as you read/write data only once and you do not impose additional mesh traversals. The latter version is the way to go if you need some boundary exchange prior such that halos, for example, are up-to-date. It might also be appropriate if you want to couple the hyperbolic solvers to other solvers, such as an elliptic term or a solid in a fluid-structure interaction scenario. When it comes to the coupling of two solvers, the first approach sketched is simple: If the two solvers feature \( N_1 \) and \( N_2 \) unknowns, you simply create one new solver with \( N_1+N_2 \) unknowns and you provide flux functionsm, ncps, ...for all of the components. The big disadvantage of this approach is that all solvers have to advance in time at the same speed, that you might replicate code if you have already two existing solvers, and that you also might run a lot of unneeded calculations if only some PDE equations evolve actually in time. Technically, the implementation of this variant however is straightforward and we will not discuss it further here, i.e. our description from hereon assumes that you work with two solvers and/or separate mesh sweeps.

Using multiple solvers within ExaHyPE is, in principle, straightforward, too. You create two solver instances and you add them to your project. Both solvers then will be embedded into the mesh and run independently. They will hence interact through the adaptive mesh refinement - if one solver refines, the other one will follow - but otherwise be totally independent. While this approach allows us to have a clear separation-of-solvers, the coupling of the two systems is not that straightforward. And we have to couple them if we want to solver our initial setup phrased as system of PDEs.

How to couple the PDEs depends upon the fact if you want to directly project the solutions onto each other of if you make one PDE feed into the other one via the right-hand side (or in general some "material" parameters within its PDE other than the evolution unknowns). If you coupld PDEs directly, you can use your PDE solvers as they stand.

To prepare the system of PDEs in the other case, I recommend to add new material (auxiliary) parameters to each PDE which contain the cooupling term. That is, if you have two solver A and B and B has an impact on A through a scalar and A influences B through a vector, thend you add one material parameter to A and three to B.

Those material parameters are not material parameters in the traditional sense. They change over time. B will set the material parameters of A and vice versa.

There are two places where we can add coupling, i.e. map solution data onto each other: It can either be a preprocessing step of the time step or a postprocessing step. While, in principle, both approaches do work here, there's a delicate difference: In the pre-processing step, we have access to the patch data plus the halo. In the post-processing step, we have only access to the patch content.

We note that we exclusively discuss volumetric mapping in this section. This is the only coupling we support at the moment within ExaHyPE, i.e. we do not support mapping of face data onto each other (anymore). Besides volumetric coupling, you can obviously "only" couple global properties with each other. This is however similar to the time step synchronisation as discussed below and therefore not subject of further discussion.

Unless you use fixed time stepping schemes with hard-coded time step sizes that match, any two solvers with ExaHyPE run at their own speed. Even more, each individual cell runs at its own speed and hosts its own time stamp. For local timestepping, different cells may advance at different speed in time. For adaptive timestepping, all cells of one solver will advance with the same time step size.

For most solvers, it is therefore sufficient to plug into getAdmissibleTimeStepSize() of the superclass. Overwrite it, call the superclass' variant and then alter the returned value.

This is the approach used in Euler example with self-gravitation via the Poisson equation. If you want to use local time stepping or run checks on the timestamp per cell, you will have to alter the cell's meta data holding its time stamp. Things in this case become more involved, i.e. you will have to study the underlying action sets and change the generated source code in there.

ExaHyPE couples volumetrically. That does not mean that all solvers have to be solved everywhere. Indeed, the idea to offer support for multiple solvers arose originally from the a posteriori limiting of Dumbser et al where a Finite Volume solver complements ADER-DG solutions where shocks arise.

Most projects that follow these rationale realise the same pattern: We define two different solvers, and we couple them with each other. The coupling (and solve) however are local operations:

We will effectively end up with a domain decomposition where parts of the computational domain are handled by the higher-order solver and the other parts are handled with FV. To realise this, we need a marker or oracle, i.e. a magic function per cell which tells us, similar to a multi-phase flow, which solver rules in this particular cell. The marker will guide us which solver is "valid" in which cell, where one solver overrules the other one, and where we do not have to store one solver's data or the other.

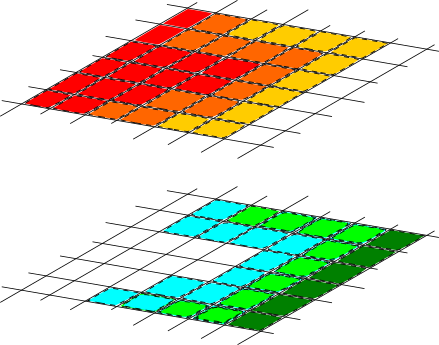

The image below illustrates the core idea behind typically coupling approaches in ExaHyPE. The sizes of the overlap might differ, but the principle is always the same. The illustration shows the minimal overlap that we can typically use. Both the upper and the lower mesh illustrate the same mesh. I use colours to show which solver exists in which mesh cell.

Starting from this description, sophisticated coupling equations can be realised, where you exploit the fact that we have a volumetric overlap.

Skip this section if you prefer a hard-coded marking of active regions.

Once you know where a solver is valid, it makes sense also to only store the solver's data in the valid region. Otherwise, a lot of memory is "wasted" which furthermore will be moved/accessed in each and every grid sweep, as the mesh management of Peano so far does not know that ExaHyPE might not need the data. ExaHyPE has to let Peano know.

The technical principles behind such a localisation are discussed on a generic Peano page of its own. ExaHyPE builds up pretty deep inheritance hierarchy where every subclass specialises and augments the basic numerical scheme, and also implements additional guards when data is stored or not. However, most of these subclasses rely on only six routines to control the data flow:

By default, most solvers only store data for unrefined cells. They mask coarser levels with in the tree out.

Subclasses such as enclave tasking variations augment the guards further such that not all data are stored all the time. Nothing stops you from adding further constraints and to use a solver only in a certain subdomain for example.

For the actual solver coupling, four hook-in points are available:

These steps all have differen properties. The global preprocessing precedes any solver routine, i.e. it is called before any compute kernel on any solver is launched. It also is guaranteed to run sequentially, i.e. there is nothing running in parallel to the preprocess actino set. For the postprocessing, similar arguments hold. These steps come after all solvers have updated their solution. The kernel hook-ins (2) and (3) in contrast can run in parallel.

Any coupling can be arbitrary complicated, but there are several off-the-shelf routines offered in toolbox::blockstructured. Interesting variants include toolbox::blockstructured::copyUnknown() and ::toolbox::blockstructured::computeGradientAndReturnMaxDifference(). Besides those guys, you might also want to use the AMR routines within the blockstructured toolbox and the ExaHyPE folders, where you find, for example, linear interpolation and restriction.

Important: If you couple two solvers, you have to carefully take into account